Section: New Results

Cultural Heritage

VR and AR tools for cultural heritage

EvoluSon: Walking Through an Interactive History of Music

Participants: Ronan Gaugne, Florian Nouviale and Valérie Gouranton

The EvoluSon project [4] proposes an immersive experience in which the spectator explores an interactive visual and musical representation of the main periods of the history of Western music. The musical content consists of original compositions based on the theme of Bach's Art of Fugue, illustrating eight main musical eras from Antiquity to the contemporary epoch. The project contributes both to the use of VR for representing intangible culture and to interactive digital art that puts the user at the centre of the experience: it uses virtual reality to showcase the different pieces through the ages. The user is immersed in a coherent visual and sound environment and can interact with both modalities (see Figure 16).

This project was done in collaboration with the Research Laboratory on Art and Music of University Rennes 2.

Immersive Point Cloud Manipulation for Cultural Heritage Documentation

Participants: Jean-Baptiste Barreau, Ronan Gaugne and Valérie Gouranton

Virtual reality combined with 3D digitisation makes it possible to immerse archaeologists in 1:1 copies of monuments and sites. However, archaeologists' scientific communication relies on 2D representations of the monuments they study. In [2] we proposed a virtual reality environment with an innovative cutting-plane tool that dynamically produces 2D cuts of digitised monuments. A point cloud is the basic raw data obtained when digitising cultural heritage sites or monuments with laser scans or photogrammetry. These data form a rich and faithful record, provided that adequate tools are available to exploit them. Analysing and visualising them on a PC currently requires software skills and can create ambiguities regarding architectural dimensions. We designed a toolbox to explore and manipulate such data in an immersive environment, and to dynamically generate 2D cutting planes usable for cultural heritage documentation and reporting (see Figure 17).
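As an illustration of the cutting-plane idea, the following minimal Python sketch keeps the points lying in a thin slab around a plane and expresses them in 2D coordinates within that plane. It is not the tool described in [2]: the function name `slice_point_cloud`, the `thickness` parameter and the choice of in-plane axes are assumptions made only for this example.

```python
import math


def _cross(a, b):
    """Cross product of two 3D vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _normalise(v):
    """Return v scaled to unit length; reject the zero vector."""
    length = math.sqrt(_dot(v, v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def slice_point_cloud(points, plane_point, plane_normal, thickness):
    """Hypothetical helper: 2D cut of a point cloud along a plane.

    Keeps every point whose distance to the plane is at most
    thickness / 2 and returns its (u, v) coordinates in the plane,
    measured from plane_point.
    """
    n = _normalise(plane_normal)
    # Pick a helper axis that is not parallel to the normal, then build
    # two orthonormal in-plane axes u and v.
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = _normalise(_cross(n, helper))
    v = _cross(n, u)  # already unit length, since n and u are orthonormal

    cut = []
    for p in points:
        d = (p[0] - plane_point[0], p[1] - plane_point[1], p[2] - plane_point[2])
        if abs(_dot(d, n)) <= thickness / 2.0:
            cut.append((_dot(d, u), _dot(d, v)))
    return cut
```

The slab thickness trades off density against sharpness: a thicker slab collects more points per cut but blurs walls that are not perpendicular to the plane.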


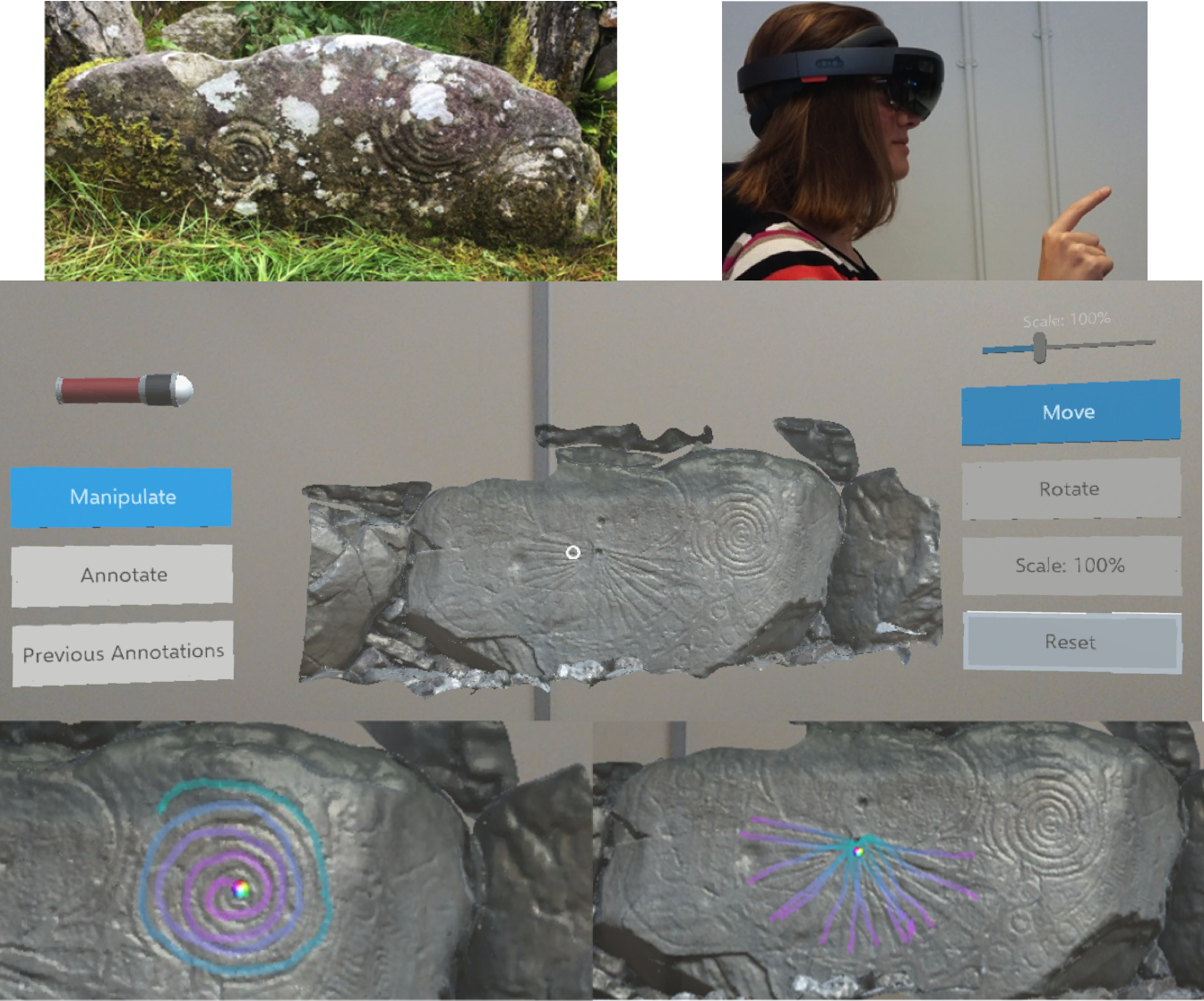

MAAP Annotate: When Archaeology meets Augmented Reality for Annotation of Megalithic Art

Participants: Jean-Marie Normand

Megalithic art is a spectacular form of symbolic representation found on prehistoric monuments. Carved by Europe's first farmers, this art offers an insight into the creativity and vision of prehistoric communities. As examples of this art continue to fade, it is increasingly important to document and study these symbols. In [12] we introduced MAAP Annotate, a Mixed Reality annotation tool from the Megalithic Art Analysis Project (MAAP). It provides an innovative method of interacting with megalithic art, combining cross-disciplinary research in digital heritage, 3D scanning and imaging, and augmented reality. The paper describes the development of the tool, along with the results of an evaluation carried out with a group of archaeologists from University College Dublin, Ireland. It is hoped that such tools will enable archaeologists to collaborate worldwide, and non-specialists to experience and learn about megalithic art (see Figure 18).

This work was done in collaboration with the School of Computer Science and Informatics and the School of Archaeology of University College Dublin (UCD).


Multi-modal images and 3D printing for cultural heritage

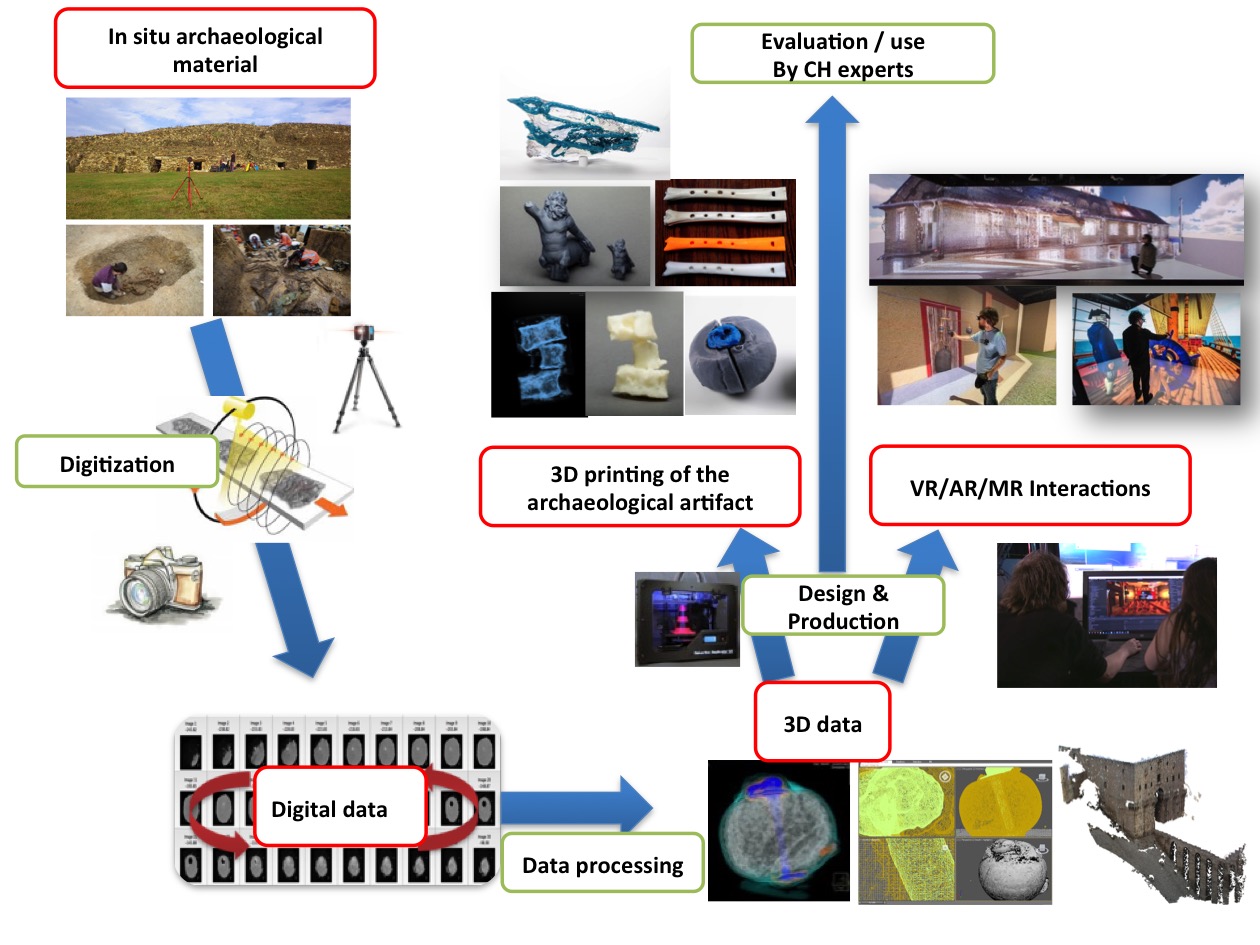

Physical Digital Access Inside Archaeological Material

Participants: Théophane Nicolas, Ronan Gaugne and Valérie Gouranton

Traditionally, accessing the interior of an artefact or an archaeological material is a destructive activity. We proposed a non-destructive alternative that combines a computed tomography (CT) scan, as used in medical imaging, with advanced transparent 3D printing to generate a physical representation of an archaeological artefact or material [7]. This project is conducted with archaeologists from Inrap and computer scientists from Inria-IRISA. Its goal is to propose innovative practices, methods and tools for archaeology based on 3D digital techniques. We notably proposed a workflow (see Figure 19) in which the CT scan images are used to produce volume and surface 3D data that serve as a basis for new evidence usable by archaeologists. This new evidence can be observed in interactive 3D digital environments or through physical copies of internal elements of the original material.
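To make the step from CT images to volume and surface data more concrete, here is a deliberately simplified Python sketch. It assumes the CT scan is available as a 3D grid of density values and that a single threshold separates the material of interest from its surroundings; both the threshold approach and the name `surface_voxels` are assumptions for illustration, not the workflow of [7]. A production pipeline would more likely extract a triangle mesh, for instance with a marching-cubes implementation.

```python
def surface_voxels(volume, threshold):
    """Hypothetical helper: find boundary voxels of a thresholded CT volume.

    volume is a nested list indexed as volume[z][y][x] holding density
    values. A voxel is "solid" when its value is >= threshold. A solid
    voxel belongs to the surface when at least one of its six face
    neighbours is not solid or lies outside the volume.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])

    def solid(z, y, x):
        inside = 0 <= z < nz and 0 <= y < ny and 0 <= x < nx
        return inside and volume[z][y][x] >= threshold

    neighbours = [(1, 0, 0), (-1, 0, 0),
                  (0, 1, 0), (0, -1, 0),
                  (0, 0, 1), (0, 0, -1)]

    surface = []
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not solid(z, y, x):
                    continue
                # Exposed if any face neighbour is empty or out of bounds.
                if any(not solid(z + dz, y + dy, x + dx)
                       for dz, dy, dx in neighbours):
                    surface.append((z, y, x))
    return surface
```

The full set of solid voxels plays the role of the volume data, while the returned boundary voxels approximate the surface from which a printable shell could be derived.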

This work was done in collaboration with Inrap.


Combining CT-scan, Photogrammetry, 3D Printing and Mixed Reality

Participants: Théophane Nicolas, Ronan Gaugne, Bruno Arnaldi and Valérie Gouranton

Archaeological artefacts and the sediments that contain them constitute sometimes tenuous evidence that requires analysis, preservation and showcasing. Various digital analysis methods offering non-destructive ways to preserve, analyse and showcase archaeological heritage have been developed over recent years. However, these techniques are often restricted to the visible surface of objects, monuments or sites. Medical imaging techniques are increasingly used in archaeology because they give non-destructive access to the internal and often fragile structure of artefacts, but this use is mostly limited to simple visualisation. The information obtained by CT scan is rendered visually, and its inherent detail can be exploited much more widely with recent 3D technologies such as virtual reality, augmented reality, multimodal interaction and additive manufacturing. By combining medical imaging with these technologies, it becomes possible to identify non-visible objects and analyse them scientifically with efficient, non-destructive methods, and to assess their fragility and state of preservation. It is also possible to assess the restoration of a corroded artefact, to visualise, analyse and physically manipulate an inaccessible or fragile object (CT, 3D printing), and to observe the context of our hidden archaeological heritage (virtual, augmented or mixed reality, 3D). The development of digital technologies will hopefully lead to a democratisation of this type of analysis. We illustrated our approach with the study of several artefacts from the recent excavation of the Warcq chariot burial (Ardennes, France). We notably presented in [29] a physical interaction with inaccessible objects based on a transparent 3D print of a horse's cranium (see Figure 20).

This work was done in collaboration with Inrap, Image ET and University Paris 1.


A Multimodal Digital Analysis of a Mesolithic Clavicle: Preserving and Studying the Oldest Human Bone in Brittany

Participants: Jean-Baptiste Barreau, Ronan Gaugne and Valérie Gouranton

The oldest human bone in Brittany was excavated from the Mesolithic shell midden of Beg-er-Vil in Quiberon and dated to about 8200 years ago. The low-acidity soils of this dump area offer exceptional sedimentary conditions. For these reasons, and because these bones have a very particular heritage and symbolic value, their study goes hand in hand with concerns of conservation and museographic presentation. The clavicle consists of two pieces discovered separately, one metre apart. The two pieces match, so they can be assembled into a single fragment of approximately 7 centimetres. Cut marks are clearly visible on the surface of these bones. They are linked to post-mortem processing that needs to be better characterised. The clavicle was studied through a process that combines advanced 3D image acquisition, 3D processing and 3D printing, with the goal of providing relevant support for the experts involved [25]. The bones were first scanned with a CT scanner and digitised with photogrammetry in order to obtain a high-quality textured model. The CT scan proved insufficient for a detailed analysis, so the study was completed with a μCT scan providing a very accurate 3D model of the bone. Several 3D-printed copies of the clavicle were produced to serve as tangible, easily annotated supports that could be shared between the experts involved in the study. The 3D models generated from μCT and photogrammetry were combined to provide an accurate and detailed 3D model. This model was used to study desquamation and the different cut marks. These cut marks were also studied with a traditional binocular magnifier and with digital microscopy. The latter technique made it possible to characterise the cut marks, revealing a probable meat-cutting process with a flint tool (see Figure 21). This cross-analysis made it possible to document a fundamental heritage piece and to ensure its preservation.

This work was done in collaboration with Inrap, UMR CReAAH, CNRS-INE and Université Paris 1.


Generating 3D data for cultural heritage

3D Reconstruction of the Fortified Entrance of the Citadel of Aleppo from a few Sightseeing Photos

Participants: Jean-Baptiste Barreau and Ronan Gaugne

Built at the beginning of the 16th century by the final Mamluk sultan Al-Achraf Qânsûh Al-Ghûrî, the entrance to the Citadel of Aleppo was particularly affected by an earthquake in 1822, bombings during the Battle of Aleppo in August 2012, and a collapse of ramparts due to an explosion in July 2015. Although, compared to other Syrian sites, enough remains survive to grasp the original architecture, the civil war makes any "classic" digitisation process by photogrammetry or laser scanning extremely difficult. On this basis, we proposed in [26] a process to produce a 3D model "as relevant as possible" from only a few sightseeing photographs. This process combines fast 3D sketching by photogrammetry, 3D modelling and texture mapping, and relies on a corpus of pictures available on the Internet. Furthermore, it has the advantage of being applicable to destroyed monuments if sufficient pictures are available (see Figure 22).

This work was done in collaboration with UMR CReAAH and Inrap.

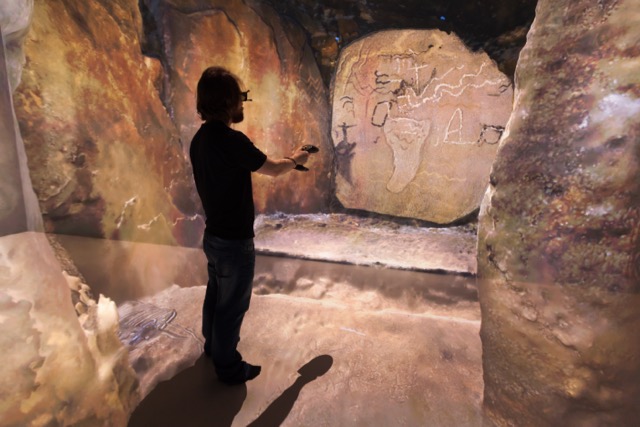

Raising the Elevations of a Megalithic Architecture: Methodological Developments

Participants: Jean-Baptiste Barreau, Quentin Petit and Ronan Gaugne

Elevations have been little studied during explorations of megalithic architectures. Over the past ten years, interest in these elevations has been growing in western France, particularly with the application of building archaeology and its tools to their study. Megalithic architecture, however, has its own characteristics that make manual surveys difficult. The first step presented in [27] was to acquire a precise and manageable 3D model of the whole architecture. Photogrammetry was tested, but the cramped space made photography difficult, so laser scanning was preferred. From the resulting point cloud, a computer processing protocol was developed to obtain 2D images of the elevations from the desired viewpoints. On these images, a stone-by-stone drawing can be made in the laboratory and corrected directly in the field using a tablet computer. Our method thus met the accessibility constraints. Above all, it increased the time devoted to observation in the field, with a final result identical to that of a manual survey.
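The step from point cloud to 2D elevation image can be sketched as an orthographic projection onto a vertical plane. The Python example below is an assumption-laden illustration rather than the protocol of [27]: it supposes the view direction is the +y axis, that z points upward, and that each pixel simply stores the depth of the nearest point. The name `render_elevation` and the `pixel_size` parameter are hypothetical.

```python
def render_elevation(points, pixel_size):
    """Hypothetical helper: orthographic elevation image of a point cloud.

    The viewer looks along +y. Columns follow x, rows follow z with
    row 0 at the top, and each pixel keeps the smallest y (the point
    nearest to the viewer) that falls into it. Empty pixels are None.
    """
    if not points:
        raise ValueError("empty point cloud")
    if pixel_size <= 0:
        raise ValueError("pixel_size must be positive")

    xs = [p[0] for p in points]
    zs = [p[2] for p in points]
    x_min, z_max = min(xs), max(zs)
    width = int((max(xs) - x_min) / pixel_size) + 1
    height = int((z_max - min(zs)) / pixel_size) + 1

    image = [[None] * width for _ in range(height)]
    for x, y, z in points:
        col = int((x - x_min) / pixel_size)
        row = int((z_max - z) / pixel_size)  # flip so that "up" is row 0
        # Keep only the point closest to the viewer in each pixel.
        if image[row][col] is None or y < image[row][col]:
            image[row][col] = y
    return image
```

Other elevations can be obtained with the same function by first rotating the point cloud so that the desired view direction aligns with +y.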

This work was done in collaboration with UMR CReAAH and SED Rennes.